WORKSHOP: MY AI IS BETTER THAN YOURS WS25

MODELS: PIX2PIX

Lecturers: Kim Albrecht, Lars Christian Schmidt, Yağmur Uçkunkaya

Winter 2025

Model Type: Conditional Generative Adversarial Network (cGAN)

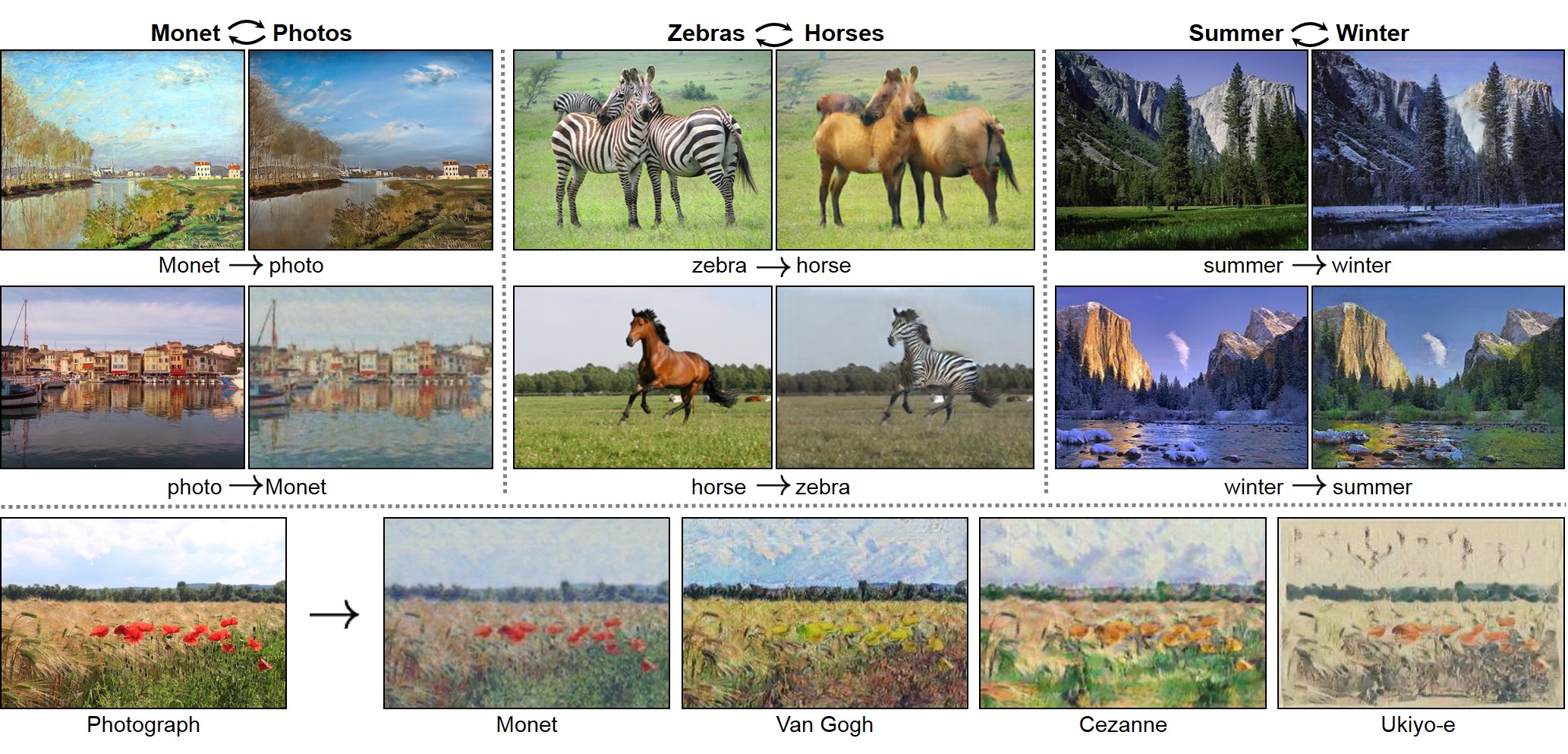

What it does: Turns one image into another image, based on pixel-to-pixel translation.

Media: Images

Pix2Pix is a machine learning model that learns to turn images of one type into images of another type. It works especially well when the images are aligned, meaning the “before” and “after” images match in shape and layout. During training, the model looks at many image pairs and learns the translation pattern. Afterwards, you can give it a new input image, such as a sketch, and it will try to create a realistic version based on what it has learned.

Workflow

1. Pick a concept

Think of a transformation:

- Sketch → Object

- Drawing → Photo

- Doodle → Building

- Abstract shape → Fashion

- Daylight photo → Night version

2. Collect or create your data

- Aim for 50–100 image pairs or more. Even 20–30 pairs can work, but expect strange results.

- The image pairs need to be aligned. For example, draw directly on top of a photo, or always draw the same shape in the same spot.
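
Before preprocessing, it can help to check that every input image really has a partner. The sketch below is one possible way to do that, assuming (this is not part of the workshop material) that inputs and targets are stored in two separate folders and that partners share the same file name, for example `001.png` and `001.jpg`. The function name `find_pairs` and the list of accepted file extensions are made up for illustration. It only checks file names; it cannot tell whether the images are visually aligned.

```python
from pathlib import Path

# Assumption: these are the image file types used in the dataset.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def find_pairs(input_dir, target_dir):
    """Match images from two folders by file name (ignoring the extension).

    Returns (pairs, unmatched):
      pairs     - list of (input_path, target_path) tuples, sorted by name
      unmatched - sorted list of names that exist in only one folder
    """
    def index(folder):
        # Map "001" -> Path(".../001.png") for every image file in the folder.
        return {
            p.stem: p
            for p in Path(folder).iterdir()
            if p.suffix.lower() in IMAGE_SUFFIXES
        }

    inputs = index(input_dir)
    targets = index(target_dir)

    shared = sorted(inputs.keys() & targets.keys())
    pairs = [(inputs[name], targets[name]) for name in shared]
    unmatched = sorted(inputs.keys() ^ targets.keys())
    return pairs, unmatched
```

Calling `find_pairs("sketches", "photos")` (hypothetical folder names) returns the matched pairs, so `len(pairs)` can be compared against the 50–100 guideline, and `unmatched` lists the images that still need a partner.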

3. Preprocess the data

- Resize all images to the same size (e.g., 256×256 pixels)

- Place image pairs side-by-side: left = input, right = target
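
The two preprocessing steps above can be sketched in a few lines of Python. This is a minimal example, assuming the Pillow imaging library is installed; the function name `make_pair` is invented here and is not part of any Pix2Pix tool. Note that it simply stretches each image to a square, so images with a very different aspect ratio will look distorted unless they are cropped first.

```python
from PIL import Image

SIZE = 256  # side length in pixels, following the 256×256 example


def make_pair(input_img, target_img, size=SIZE):
    """Resize both images to size×size and place them side by side.

    The result is (2 * size) wide and size high:
    left half = input, right half = target.
    """
    # Convert to RGB so both halves share the same colour mode,
    # then stretch each image to a square of the requested size.
    left = input_img.convert("RGB").resize((size, size))
    right = target_img.convert("RGB").resize((size, size))

    combined = Image.new("RGB", (size * 2, size))
    combined.paste(left, (0, 0))      # left half = input
    combined.paste(right, (size, 0))  # right half = target
    return combined
```

For a single pair this could be used as `make_pair(Image.open("sketch_001.png"), Image.open("photo_001.png")).save("pair_001.png")`, where the file names are placeholders. Repeating this for every pair gives a folder of 512×256 training images.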